Trends

No Consenus, No Hierarchy of Prague: Progress or Pandemonium?

No Consensus, No Hierarchy at Prague: Progress or Pandemonium?

Sten Westgard, MS

October 2023

“Reality does not shape theory, but rather the reverse. Thus power gradually draws closer to ideology than it does to reality; it draws strength from theory and becomes entirely dependent upon it…. It then appears that theory itself, ritual itself, ideology itself, makes decisions that affect people, and not the other way around.”

- Vaclav Havel, The Power of the Powerless

Prague, a gorgeous city with an Imperial past, a city that has seen different regimes of oppression over the centuries, a city home to authors Franz Kafka, Milan Kundera, and Vaclav Havel, played host to the latest CELME conference, promoted and engineered to be the successor to the Milan Conference.

The Prague conference began and ended with an anti-proclamation: There will be no Prague Consensus, no new Prague Hierarchy to replace Milan. The Milan Consensus still stands as the accepted model for performance specifications. The focus of Prague was to show practical applications of performance specifications.

The meeting that happened in between those statements, meanwhile, did much to unravel the consensus that came before.

Prelude: a short dictionary of misunderstood words

To the layman, Quality Goals and Quality Requirements are synonymous, as are Performance Specifications. Not so, in the realm of Laboratory Quality. The most concrete outcome of Prague is that Goals have been redefined as something aspirational, ideal, but possibly not achievable. Goals are nice, but Requirements and Specifications are mandatory. Performance Specifications, which has temporarily been the primary term, may be giving way to Performance Requirements.

For the purposes of this article, however, we will use terms interchangeably, just to frustrate the nit-pickers.

The unspeakable totality of error

“[T] moment someone breaks through in one place, when one person cries out, ‘The emperor is naked!’ – when a single person breaks the rules of the game, thus exposing it as a game – everything suddenly appears in another light and the whole crust seems then to be made of a tissue on the point of tearing and disintegrating uncontrollably.”

- Vaclav Havel, The Power of the Powerless

In the wake of Milan 2014 – the last major conference on performance specifications, one that issued the subsequent 2015 Milan Consensus, which is essentially the Stockholm Consensus, Squished – a number of “Task-and-Finish Groups” TFG) s were established. The idea from the Milan organizers was to have small groups tackle the difficult issues. TFG-TE tackled the format of a performance specification, and sought to find common ground in the debate over measurement uncertainty (MU) and analytical total error (variously called TE, TEa, TAE, or ATE). The TFG-TE published its report in Clin Chem Lab Med 2018; 56(2): 209–219:

“The aim of the present paper is to fulfill the task of the TFG-TE: to present a proposal on how to use the TE concept and how to possibly combine measures of bias and imprecision in performance specifications. The theoretical and practical underpinning of TAE and uncertainty methods are presented as groundwork for future consensus on their use in practical performance specifications.”

….

“TAE methods for quantifying the quality of measuring systems and defining performance specifications are widely used in laboratory medicine. They are appropriate for analyzing data from single measurement results in proficiency testing schemes and may constitute a basis for the calculation of performance.”

….

“Therefore, it seems prudent to continue the use of TAE methods and when appropriate replace them with MU calculations when the latter offer proven advantages.”

As an attendee of the Milan meeting, a member of the TFG-TE and co-author of the paper, I felt the outcome endorsed a coexistence of the two models: MU and TAE can be used by laboratories, as they prefer. MU was preferred by some, but users of TAE weren’t under threat of excommunication.

For the speakers of Prague, however, the time to abandon TE is NOW. The topics were skillfully chosen and presentations carefully designed to avoid nearly all mention of Total Error. For the committee driving the Quality conferences, Total Error seemed to be the concept that must not be named.

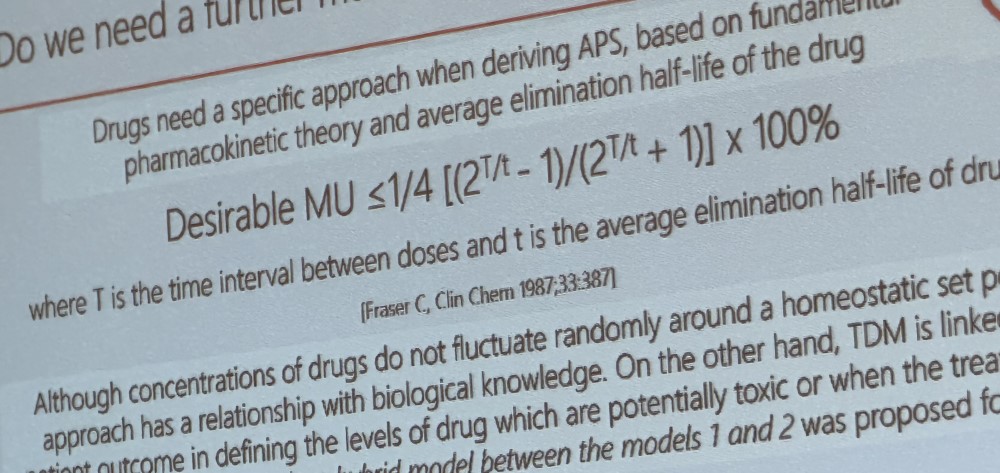

This omerta on Total Error included a bizarre rewriting of history. Past accomplishments of the Quality conferences, which had formerly specified allowable total errors, were now claimed to have been calculating measurement uncertainty all along. This picture here shows the work of Dr. Callum Fraser from 1987, determining allowable measurement uncertainty.

We all acknowledge the greatness of Dr. Fraser’s work, but to claim that in 1987 he was calculating specifications for an approach that did not yet exist – that exhausts plausibility.

The unachieveable assumptions of the clinical model

“The true opponent of totalitarian kitsch is the person who asks questions. A question is like a knife that slices through the stage backdrop and gives us a look at exactly what lies hidden behind it.”

― Milan Kundera, The Unbearable Lightness of Being

Milan’s Model 1 is Clinical. That is, the best performance specifications come from clinical outcome studies or discussions with clinicians on the use of the tests. Or they can be derived through simulation studies that estimate the impact of changes in test results on the resulting diagnoses or treatments.

The first part of the conference was devoted to explaining how Clinical goals are almost impossible to achieve. First, clinicians are unfamiliar with the language of performance specifications, so they are not very good at suggesting limits of X precision or Y bias, much less Z uncertainty or (unspoken total error). If you ask them how much error is allowable, or uncertainty is permissible, they often answer, “None.”

Secondly, in the experience of the Milan Task Finishing Group that tackled Clinical Model goals, the simulation approach degrades effectively into “assumption shopping.” Simulations will generate different goals, based primarily on the assumptions baked into the model (the model of the simulation, not the Model of the Milan hierarchy).

Later in the conference, Dr. Graham Jones noted that it was genuinely impossible to create a Clinically based performance specification, since they are invariably built on a foundation of the State of the Art performance. A clinician’s experience is based on the current method performance; they cannot imagine a better assay and further predict how that would change their interpretations of results. Similarly, any simulation to make a clinical specification is generated in the shadow of current method performance, often directly feeding on that method performance.

Having essentially eliminated Model 1 from practical use, the conference moved on.

The unpredictable variability of the biological variation database

“Correct understanding of a matter and misunderstanding the matter are not mutually exclusive.”

― Franz Kafka, The Trial

Model 2 is biological variation. The greatest achievement since Milan has been the establishment of the EFLM Biological Variation database, which now houses hundreds of biological variation studies and makes them available to all faiths, both Total Error and Measurement Uncertainty. You can visit, see an analyte, and the database will helpfully calculate desirable (or minimum or optimal) imprecision, desirable bias, desirable total allowable error, and maximum allowable measurement uncertainty.

But the database is hardly carved in stone, and Dr. Sandberg made some dramatic proposals to change it. He noted that current specifications of minimum, desirable, and optimal are in some ways, arbitrary, and a more rational approach might make estimations for something more akin to a 10th, 50th, and 90th percentile. If such a change was enacted, he refused to confirm that the old set of specifications would remain, or that access to that data would remain available to users.

Dr. Sandberg further noted that the bias specifications generated from biological variation estimates are partially derived using a clinical outcome model. Therefore, the bias specification is also something that might possibly be revised in the future.

At multiple times throughout the conference, Dr. Panteghini vehemently insisted that the Biological Variation database should not contain estimates of allowable total error. Wisely, Dr. Sandberg did not commit to removing Total Error specifications from the EFLM biological variation database. He reasoned that when people come into your store wanting to buy sausages, you need to have sausages on your shelves, otherwise, they’ll shop elsewhere. A tacit admission that the primary and most popular use of the biological variation database remains as a source of Total Error format performance specifications. But Dr. Sandberg did not unequivocally commit to maintaining anything in the database.

For Dr. Panteghini, the only worthy use of the biological variation database is to generate measurement uncertainty specifications. All other uses, particularly Total Error specifications, are wrong, and should be eliminated, perhaps prohibited. Thus, the database should be an exclusive temple to uncertainty.

So visit the EFLM biological variation while you can, it might change significantly in the future.

The unwieldy extemporaneousness of SOTA

“The only proper approach is to learn to accept existing conditions.”

― Franz Kafka, The Trial

One final criticism of the Milan and Stockholm model was that the 3rd Model, State of the Art, was not a model at all. There is no scientific theory that builds state of the art. State of the Art just exists. It is not driven by models, nor theories, but instead really by engineering and competition. Breakthroughs in technology drive the SOTA forward. The pressures of the marketplace drive innovation further still.

There were murmurs that the SOTA should be set by the best 20% of laboratories. For assays with great performance, this might generate a tyranny of the elite, forever shrinking the allowable targets. On the other end of the scale, this approach immediately sets the field up for an 80% failure rate.

Thus, by the end of 2 days, the conference had eliminated Model 1, suggested major modifications to Model 2, and had “de-modeled” Model 3. What does that leave us?

The idiosyncratic individuality of laboratories

“Living within the lie can constitute the system only if it is universal. The principle must embrace and permeate everything. There are no terms whatsoever on which it can coexist with living within the truth, and therefore everyone who steps out of line denies it in principle and threatens its entirety.”

― Milan Kundera, The Unbearable Lightness of Being

Dr. Graham Jones, again stole the show with an homage to Monty Python’s Life of Brian, “All laboratories are individuals.” By this he meant that each laboratory implements its own individual approach to quality specifications and quality control. It should be assumed, therefore, that anything a laboratory is doing is something different than any other laboratory, unless evidence proves to the contrary. He then demonstrated this by describing the bespoke regimen his own laboratory enforces. Later, Dr. Jones was followed by Elvar Theodorsson, who also presented another completely unique application of goals, rules, and runs. If these two are any example of how laboratories enact QC, then every laboratory is blazing its own trail, contrary to any hierarchy, consensus statement, or directive put forth by Stockholm, Milan or Prague.

The unbearable persistence of bias

“One does not have to believe everything is true, one only has to believe it is necessary.”

― Franz Kafka, The Trial

The weakness of the MU model comes down to how it treats bias. There have been three stages of MU bias that have been proposed over the years.

- Bias cannot be allowed to exist. It must be corrected

- Bias can be ignored when it’s small enough

- Bias is not really bias. It’s imprecision. Bias can be included in MU calculations as another variance, along with uncertainties about any corrections for bias.

For various reasons, none of these approaches is satisfying. Any contact with bench-level reality of routine laboratory operations finds:

- Bias exists. And biases don’t always get corrected.

- Bias exists and at times is not small and not possible to ignore.

- Bias is not a standard deviation or a variance. It is most often encountered when it happens in one direction, not both.

The speakers of Prague dismiss these arguments as naïve. But any glance at an EQA survey, or a peer group comparison program, immediately reveals that the bias of a method is not equal to the standard deviation of the method. It seems incredible that there’s even an argument about the existence, or direction, of bias. But for MU to succeed, this magic act must take place. It is deemed necessary that bias must be a varance. Therefore, we must, with a wave of a wand, or the convolution of logic, transform a bias into a standard deviation.

One argument is that biases must be observed over an extended period of time in order to transform them into standard deviations. Over 6 months, years, over the long run, biases may turn out to be variances. But to paraphrase the economist Keynes, “In the long run, all our patients are dead.”

These MU assertions not only fly in the face of common sense, but it turns out they are embraced only by a tiny minority of laboratories. A survey of Italian laboratories, presented by Dr. Cerriotti, found that only 5% of laboratories calculate measurement uncertainty for all their measurands, 71% of labs do not calculate MU, and 27% of labs don’t even know how to calculate MU. This, in Europe, where MU is held supreme. In the US, in contrast, the use of measurement uncertainty is practically zero percent, and the words “measurement uncertainty” are essentially unknown.

For those of you who wish, you can contribute to our global survey on measurement uncertainty here. We will have a webinar on the results in December 2023 or December 2024.

In other words, despite dominating the agenda of the Prague conference, MU is not winning over converts. It triumphs in the rarified air of academic ivory towers and conference organization. Through ISO 15189 coercion, they force labs to calculate MU, but the labs barely comply. Most labs do nothing more than complete a minimal calculation and subsequently ignore the number. For most, MU merely remains a compliance nuisance.

Indeed, the august past president of the IFCC and now head of the Global Lab Quality program was in attendance at Prague, but tellingly, he admitted that measurement uncertainty, while calculated in his laboratory, was of no practical use. It was calculated, shown to inspectors, then ignored.

The unpredictable progress of Prague

“Judgement does not come suddenly; the proceedings gradually merge into the judgement.”

― Franz Kafka, The Trial

This review glosses over much of the discussions held in Prague. Of course, there is bias present – it exists here! – in our discussion of the debate over bias. But for those wanting more details, be patient: a Special Issue of Clinical Chemistry and Laboratory Medicine will publish all of the conference speakers presentations. Undoubtedly, an editorial will accompany it, with undoubtedly a significantly different perspective.

At the very end of Prague, a multitude of proposals were aired. A list of 100 analytes to be published, with performance specifications for all three levels (optimal, desirable, minimum? Or 10th, 50th, 90th percentile? It was not decided). It was unanimously urged that the diagnostic manufacturers should provide more information, particularly the uncertainty of there reference assignments, and also, by Dr Graham Jones, their lot release criteria. The EQALM organization was suggested as a lever to standardize performance specifications. Expansion of the EFLM database to include SOTA and clinical outcome goals was also floated. Multiple performance specifications for the same analyte were proposed. Not only could performance specifications for different clinical uses be created for the same analyte, but performance specifications could be created for different physical locations of clinical use for the same analyte.

It was further noted that the conference would be summarized and recommendations generated by a meeting that followed the conference. Again, as with Milan, the meeting where decisions are made did not happen at the conference. The true recommendations will come out of private meetings, a closed circle, not the actual conference itself, nor will the attending scientists themselves get a voice in it.

There will necessarily be a future conference on quality goals, or specifications, or requirements. Stay tuned.