Trends

Quality in the Spotlight: An Antwerp Accord?

After a hiatus of 2 years, the 2016 Quality in the Spotlight conference in Antwerp reconvened at a critical time. Some of the contentious issues confronting the Milan meeting were given broader discussion and a hopeful consensus appears to be forming.

Quality, Get Thee to a Nunnery!

Sten Westgard, MS, and James O. Westgard, PhD

April 2014

In Act 3, Scene 1 of Hamlet, the young Dane is confronted by his former girlfriend Ophelia. She has been charged by her father to get more information out of Hamlet, but this sudden eagerness is quickly detected by the suspicious Hamlet. Angrily, he lashes out at her:

“Get thee to a nunnery. Why wouldst thou be a breeder of sinners? I am myself indifferent honest, but yet I could accuse me of such things that it were better my mother had not borne me. I am very proud, revengeful, ambitious, with more offences at my beck than I have thoughts to put them in, imagination to give them shape, or time to act them in. What should such fellows as I do crawling between earth and heaven? We are arrant knaves, all. Believe none of us. Go thy ways to a nunnery.”

We could not help but remember this scene as the 17th Quality in the Spotlight conference took place in Antwerp, Belgium, in, of all places, a former nunnery dating to the 12th century. The conference center once housed nuns who worked in the hospital next door, but now is a hotel and convention hall. Where once prayers were offered up to God, we instead engaged in the latest round of debate about measurement uncertainty and total analytical error.

Outside of the academics who are interested in error models, and the metrologists who seek pure measurement, this debate is rather confounding to the typical laboratorian. The typical lab is not so worried whether a glass is half full or half empty – they just know that the turnaround time on those glasses needs to meet customer expectations. Labs are, in a very real sense, crawling between earth and heaven. They are required by ISO 15189 and exhorted by metrologists to adopt the most heavenly model of measurement uncertainty. Yet a majority of labs merely comply with that regulation and make no practical use of MU – they calculate it, put the results in a folder, shove the folder in a drawer, and go on about the rest of their day. The earth they have to crawl around in is one that is plagued by imprecision and even, yes, it’s true, bias. Bias, according to metrologists, is a mortal sin that must be purged, eliminated, exorcised from the testing world. Nevertheless, bias persists: the last 2015 CAP/NGSP survey of HbA1c noted that, of 28 methods tested, 12 had significant bias problems. That means nearly half of all HbA1c methods have significant bias! What does one do in this case? You need a model that accounts for bias – and that’s where the impure but practical model of Total Analytical Error is useful. This model has been used for more than four decades now, and while imperfect, it is a sturdy tool for labs, PT and EQA programs, and provides a framework upon which we can build a QC system.

The Antwerp conference was a chance to discuss both models and propose solutions, or at least, compromises. It’s becoming clear that however impure the total analytical error model is, it does provide a practical tool that is not provided by measurement uncertainty. Similarly, measurement uncertainty provides critical information about the traceability and comparability of methods that cannot be accounted for by the total error approach. Labs need both MU and TE. Ignoring or eliminating either of these approaches leaves the laboratory impoverished.

EFLM Past President Mauro Panteghini provided new insight into how the measurement uncertainty approach should hold manufacturers more accountable for their assays. Fully half of any measurement uncertainty budget should be assigned to and managed by the manufacturer. The other half of the budget comprises the components of variation that occur directly because of the laboratory conditions. What I found interesting in the discussion was the fact that the measurement uncertainty goals presented were derived from the Ricos database, which of course was built in part to estimate allowable total error. Dr. Panteghini’s models only provide a budget for the allowable imprecision, which is taken from the desirable imprecision specification from the Ricos database. So while this measurement uncertainty approach is novel in the way that it breaks down the budget into smaller parts, it’s still essentially the same budget that is used by the allowable total error approach. The proposed Panteghini partitioning still relies on the assumption that bias is non-existent, corrected, or ignored.

Incoming EFLM president Dr. Sverre Sandberg, always notable for his candor, reviewed the Milan Consensus and noted the document represented a different, modern kind of consensus process - where a draft by the organizers is presented top-down to the participating audience, instead of a bottom-up consensus built by the audience. In that sense, it’s also worthwhile to note that the Stockholm Consensus wasn’t a true consensus process, either. Again, it was an opinion paper written by a few individuals that was then presented as a consensus. Perhaps the fact that the hierarchy didn’t exclude any parties or positions helped ensure its general acceptability. There was hardly any controversy about the Stockholm Consensus once it was released, so perhaps the spirit of consensus was truly present in that document.

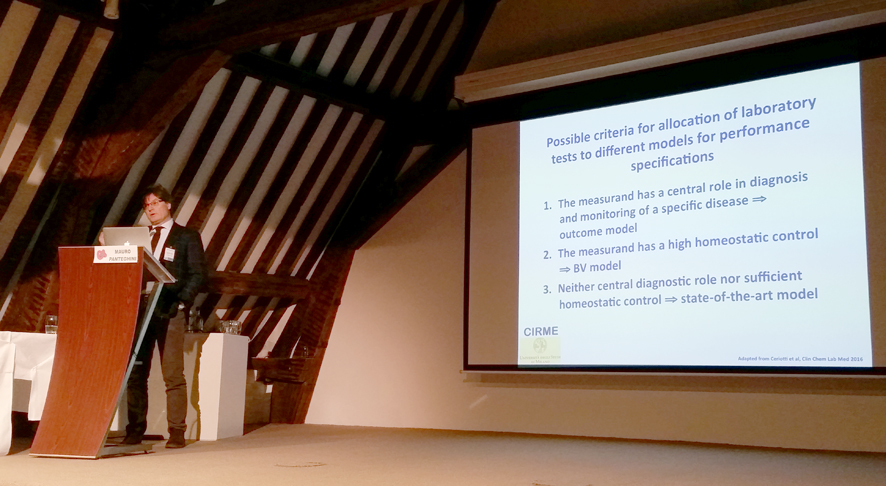

It should be reiterated that the main Milan Consensus is nothing radical. Narrowing 5 levels of hierarchy into basically 3 levels is not a ground-shaking modification. The larger changes are in all the details: the assignment of assays to most suitable models, the revision of the Ricos goals, and, our favorite topic, the debate of the form or model of the performance specifications. This was not directly debated in Milan but erupted in the discussions of the Task and Finish Group on Total Error.

Dr. Rainer Haeckel provided perhaps the first model that truly calculates an independent target measurement uncertainty, a model which must be generated by spreadsheet and will vary from level to level and reference range to reference range. However, it is notable that when the model is used, admittedly on the most simplistic level, the results of the Haeckel evaluation on an example set of performance data are nearly identical to a Sigma-metric analysis of the same data using Ricos goals. That is, the existing Total Error Sigma-metric model reaches the same conclusions as the target measurement uncertainty model. If true, we have been arguing about approaches but not noticing we reached the same destination. The Total Analytical Error model may be simplistic but it suffices. The target measurement uncertainty model may provide the same answers as the Total Error model, but it requires specialized calculations that have only just become available this year. Will we find any benefit in replacing a current simple calculation with a new complex calculation that must be implemented into software, middleware, instrumentation, when there are no new conclusions that result?
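For readers unfamiliar with the "current simple calculation" referred to above, the following is a minimal sketch of a Sigma-metric computation in its commonly used form, Sigma = (TEa - |bias|) / CV, with all terms in percent at the same concentration. The function name and the example numbers are hypothetical and chosen only for illustration; they are not taken from the conference presentations or from the Ricos database.

```python
def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Sigma-metric in its commonly used form: (TEa - |bias|) / CV.

    tea_pct  : allowable total error goal, in percent
    bias_pct : observed bias, in percent (sign is ignored)
    cv_pct   : observed imprecision (CV), in percent
    """
    if cv_pct <= 0:
        raise ValueError("CV must be positive")
    return (tea_pct - abs(bias_pct)) / cv_pct


if __name__ == "__main__":
    # Hypothetical illustration values, not real performance data.
    print(sigma_metric(tea_pct=10.0, bias_pct=2.0, cv_pct=2.0))
```

The whole calculation is a single subtraction and division, which is the sense in which the existing approach can be called simple compared with a spreadsheet-driven model that varies by level and reference range.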

As for the talks discussing the strengths of the Total Analytical Error model and the shortcomings of measurement uncertainty, there was not a great deal of "new" news to report. Total Analytical Error, despite its lack of metrological imprimatur (no ISO standard mandates its use, unlike measurement uncertainty), is still the de facto dominant approach used by laboratories. For more than four decades, it has been how laboratories managed the quality of their methods. In a highly topical analysis, the performance of the laboratory testing of the embattled Theranos start-up has been compared against Total Analytical Error goals.

In a later talk, Dr. Jan Krouwer pointed out that the word “total” may be a misnomer for Total Analytical Error. It is not an absolute total. It does not account for all error in all parts of the testing process, including the pre-pre-analytical, pre-analytical, post-analytical, and post-post-analytical. While this is true for one definition of total, it may be helpful to acknowledge that there is another, relative definition of total. When bias and imprecision are combined, there is a total that results. It may not be “the” total, but it is “a” total. So when the “Total Analytic Error” model was introduced back in the 1970s, it was a broader and more encompassing estimate of error than the previous separate estimates of imprecision and bias. It was not intended to be the ultimate, all-encompassing measurement of error that today’s metrologists seek. However, there are clinical total error models available that take into account more of these pre-analytical factors – it’s just the case that most labs don’t want longer, larger, more complex equations. They are content with “Total Analytic Error” as it currently stands. This lack of pure totality is not a sin unique to Total Analytical Error, however. Measurement uncertainty is also built on a model that focuses only on the 95% confidence interval for random error. More salient to this point is the ISO 15189 requirement that labs calculate measurement uncertainty for the analytical process only - the standard does not require that MU be calculated in a way that includes pre-analytical and post-analytical uncertainties.
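To make the "relative total" concrete, here is a minimal sketch contrasting the two calculations in their simplest textbook forms. It assumes the linear total error model, TAE = |bias| + z × CV (z of 1.65 or 2 are both commonly quoted), and an expanded uncertainty U = k × u with coverage factor k = 2, where the standard uncertainty u is approximated by the long-term CV alone. That last simplification, the function names, and the example values are assumptions made for illustration; a real uncertainty budget would normally contain more components.

```python
def total_analytical_error(bias_pct: float, cv_pct: float, z: float = 1.65) -> float:
    """Linear total analytical error: |bias| + z * CV, all in percent."""
    return abs(bias_pct) + z * cv_pct


def expanded_uncertainty(cv_pct: float, k: float = 2.0) -> float:
    """Expanded uncertainty U = k * u, assuming u is the long-term CV only.

    Bias does not appear here: the model assumes it is absent or corrected.
    """
    return k * cv_pct


if __name__ == "__main__":
    # Hypothetical method performance, for illustration only.
    bias, cv = 2.0, 2.0
    print("TAE (z=2):", total_analytical_error(bias, cv, z=2.0))
    print("U   (k=2):", expanded_uncertainty(cv))
```

With these illustrative inputs, the only difference between the two results is the bias term, which is the point of contention between the two camps.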

All of this, as they say, is deep in the weeds. There are but a handful of people in the world who are truly heavily invested in the differences between the models and the scope of the application. A vast majority of labs probably don’t have the time nor the inclination to engage on a topic which may only generate minor differences in laboratory operations.

Nevertheless, we should not dismiss these debates as trivial. From these discussions come the decisions that shape the foundations of laboratory quality management. We are truly grateful to Dr. Henk Goldschmidt who organized the Quality in the Spotlight conference and provided a forum where all sides were invited and encouraged to speak, where differences could be debated, and ultimately, accommodation and accord may be reached. When the conference meets again in two years, I imagine we will have much more to discuss.