Basic QC Practices

The QC we really do

In 2011, David Housley, Principal Biochemist of the Luton and Dunstable Hospital NHS Foundation Trust, and his colleagues prepared an audit of the Internal QC (IQC) practices in laboratories across the United Kingdom. They have graciously agreed to share the results of this audit.

June 2011

Dr David Housley, Principal Clinical Biochemist

Dr Danielle Freedman, Consultant Chemical Pathologist

Dept of Clinical Biochemistry

Luton and Dunstable Hospital

Lewsey Road, Luton, LU4 0HX, UK.

Teresa Teal, Consultant Clinical Biochemist

Biochemistry Dept

Royal Hampshire County Hospital

Romsey Road

Winchester, Hampshire, SO22 5DG, UK

with Sten Westgard, MS

Back in 2008, the North Thames Audit and Quality Assurance Group did an unusual thing: they audited the QC practices of their laboratories. Through a questionnaire, they assessed the IQC practices in 54 laboratories in part of the United Kingdom. Their results were published in an article in the Annals of Clinical Biochemistry:

Audit of internal quality control practice and processes in the south-east of England and suggested regional standards. David Housley, Edward Kearney, Emma English, Natalie Smith, Teresa Teal, Janina Mazurkiewicz and Danielle B Freedman. Ann Clin Biochem 2008; 45: 135–139. DOI: 10.1258/acb.2007.007028 (subscription required)

related discussion:

What Labs Really Do...

What Labs Really Do, Part Two

Not content with the first audit, Dr. Housley and colleagues expanded their audit to cover the entire United Kingdom. Generously, they have agreed to share the results with us and the website members.

- What control rules do laboratories use?

- How do laboratories set control limits?

- What do laboratories do with an out-of-control flag?

- Dr. Housley's comments

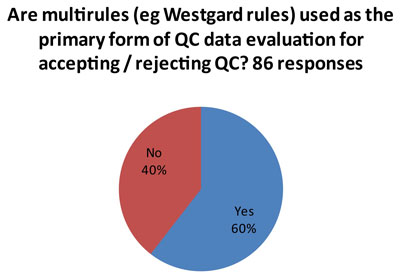

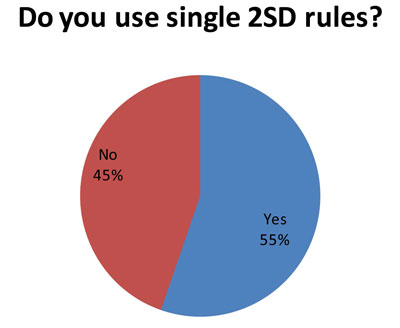

What control rules do laboratories use?

That's the good news.

This isn't such good news. (And it may explain some of the more troublesome findings later in the audit.) Since 85 laboratories answered this question and 86 answered the first, many laboratories must be using both "Westgard Rules" and 2s control rules. So some analytes get the "Westgard Rules" while others get 2s control limits.

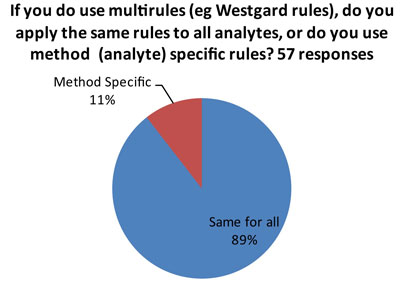

The ideal answer is that "Westgard Rules" are applied in a method-specific manner, so that all the rules aren't used on all tests. There are many tests on the market of such high quality (analytical performance) that they don't require "Westgard Rules" for QC. So if a laboratory applies "Westgard Rules" in a blanket fashion, there could be instances of "over-QC."
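To make the contrast concrete, here is a minimal Python sketch of a single 2s rule next to a small multirule check. The audit does not say which rules each laboratory combines, so the rule set below (1_3s, 2_2s, R_4s, 4_1s) is an assumption based on the commonly cited multirule combination. The R_4s check is simplified, and the function names are hypothetical.

```python
def z_scores(values, mean, sd):
    """Express control results as distances from the mean in SD units."""
    return [(v - mean) / sd for v in values]


def single_2s_rule(z):
    """Reject when the most recent control is beyond +/-2 SD."""
    return abs(z[-1]) > 2


def multirule(z):
    """Return the rejection rules violated by the most recent controls.

    Assumed rule set (not taken from the audit): 1_3s, 2_2s, R_4s, 4_1s.
    """
    violated = []
    # 1_3s: one control beyond +/-3 SD
    if abs(z[-1]) > 3:
        violated.append("1_3s")
    if len(z) >= 2:
        a, b = z[-2], z[-1]
        # 2_2s: two consecutive controls beyond 2 SD on the same side
        if (a > 2 and b > 2) or (a < -2 and b < -2):
            violated.append("2_2s")
        # R_4s (simplified): one control above +2 SD, the other below -2 SD
        if (a > 2 and b < -2) or (a < -2 and b > 2):
            violated.append("R_4s")
    if len(z) >= 4:
        last4 = z[-4:]
        # 4_1s: four consecutive controls beyond 1 SD on the same side
        if all(x > 1 for x in last4) or all(x < -1 for x in last4):
            violated.append("4_1s")
    return violated


# Hypothetical control with mean 100 and SD 2; the latest result is 104.6.
z = z_scores([100.0, 104.6], mean=100.0, sd=2.0)
print(single_2s_rule(z))  # the 2s rule flags this result
print(multirule(z))       # none of the multirule rejection rules fire
```

In this made-up case a single result at +2.3 SD trips the 2s rule but none of the multirule rejection rules, which is the kind of flag that tends to get "fixed" by repeating the control.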

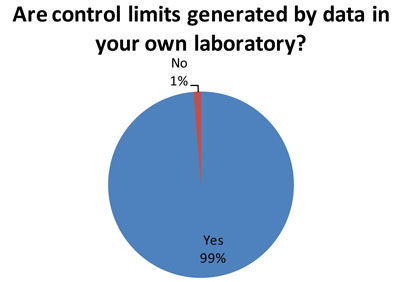

How do laboratories set control limits?

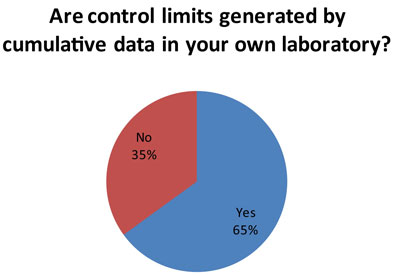

Again, this is the good news. Out of 79 responding laboratories, 78 indicated they use their own data to set control limits. Best Practice is for a laboratory to determine its mean and SD based on its own data.
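As a rough illustration of what "using your own data" means, the sketch below computes a mean, an SD and control limits from a laboratory's own control results. The data values and the function name `control_limits` are hypothetical, and the multiplier `k` is left as a parameter because the audit does not prescribe one.

```python
import statistics


def control_limits(results, k=2.0):
    """Return (lower, mean, upper) limits at mean +/- k SD.

    `results` are the laboratory's own control measurements;
    the sample SD (n - 1 denominator) is used.
    """
    mean = statistics.mean(results)
    sd = statistics.stdev(results)
    return mean - k * sd, mean, mean + k * sd


# Hypothetical control results collected by the laboratory itself.
own_results = [98.0, 100.0, 102.0, 100.0, 100.0]
lower, mean, upper = control_limits(own_results, k=3.0)
print(f"mean={mean:.1f}, limits={lower:.2f} to {upper:.2f}")
```

A real calculation would use far more than five results; the short list here only keeps the arithmetic easy to check by hand.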

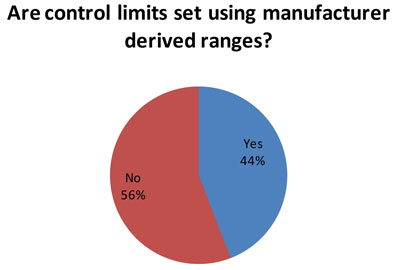

(59 responses to this question) Nevertheless, many laboratories often use the manufacturer ranges to set control limits. This can be a problem because the typical manufacturer range is very wide, so an SD derived from that range is too large for an individual laboratory to use.
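A small sketch shows why a wide range matters. All numbers below are invented for illustration, and the code assumes the manufacturer range represents the mean plus or minus 2 SD, which the audit does not state.

```python
def implied_sd(low, high, k=2.0):
    """SD implied by a range ASSUMED to span mean +/- k SD."""
    return (high - low) / (2.0 * k)


def within(value, low, high):
    """True when a control result falls inside the given limits."""
    return low <= value <= high


# Hypothetical manufacturer range and hypothetical in-house performance.
manufacturer_low, manufacturer_high = 90.0, 110.0
lab_mean, lab_sd = 100.0, 1.5

# Limits the laboratory would set from its own data (mean +/- 3 SD).
lab_low, lab_high = lab_mean - 3 * lab_sd, lab_mean + 3 * lab_sd

# A result shifted by 4 of the laboratory's own SDs.
shifted_result = lab_mean + 4 * lab_sd

print(implied_sd(manufacturer_low, manufacturer_high))               # SD implied by the range
print(within(shifted_result, manufacturer_low, manufacturer_high))  # passes the wide limits
print(within(shifted_result, lab_low, lab_high))                    # fails the lab's own limits
```

With these made-up figures the range implies an SD of 5, more than three times the laboratory's observed SD of 1.5, so a sizeable shift still sits comfortably inside the manufacturer limits.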

This is more good news. A majority of laboratories (60 responses to this question) are using long-term data on imprecision to set their control limits. This aligns the control limits with the actual performance of the instrument. In this way, control limits can be set according to the specific local conditions and performance of each test.

What do laboratories do with an out-of-control flag?

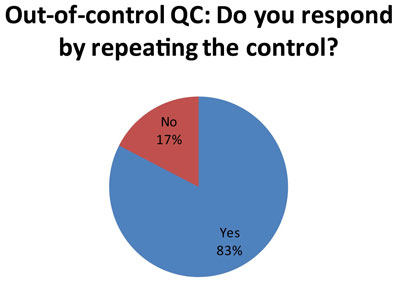

Here's one of the more unhappy revelations. Probably stemming from the use of 2s control limits, which have a high false rejection rate, many laboratories respond to an out-of-control result by repeating the control (57 laboratories out of 69 responses). See Repeated, Repeated, Got Lucky to understand why this might not be the best response.
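The size of that false rejection rate can be estimated with a few lines of Python. This is a sketch under stated assumptions: control results are taken to be independent and normally distributed, the method is running in control, and the function names are hypothetical.

```python
import math


def prob_within(k):
    """P(|z| < k) for a standard normal variable."""
    return math.erf(k / math.sqrt(2))


def false_rejection_rate(k, n_controls):
    """Chance that at least one of n in-control results exceeds +/- k SD."""
    return 1.0 - prob_within(k) ** n_controls


for n in (1, 2, 3):
    print(n, round(false_rejection_rate(2, n), 3))
```

Under these assumptions the rate comes out at roughly 4.6% with one control per run, 8.9% with two and 13% with three, even though nothing is wrong with the method. With 3 SD limits and a single control, the same calculation gives well under 1%.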

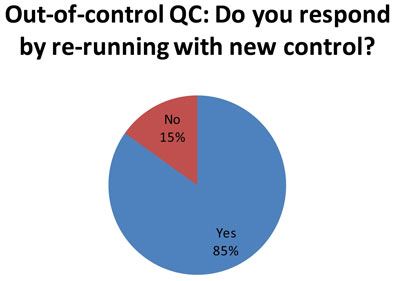

Here is equally distressing news. When a simple repeat doesn't bring controls back "in", many labs (62 of 73 responses) try to see if a new control will fall "in." See Doing the Wrong QC Wrong for advice on why that, too, is not an ideal response.

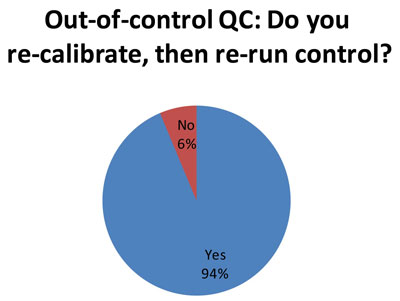

74 out of the responding 79 laboratories indicate that they recalibrate after an out-of-control flag. This is an appropriate response to an out-of-control flag. It represents actual trouble-shooting, rather than just trying a "do over" with repeated or new controls. What is worrisome, however, is that we know many of the laboratories in the audit are using 2s control limits, which generate a substantial number of false rejections. Thus, the recalibration may be triggered only by the 2s limits, so the trouble-shooting is really only chasing ghosts. It would be better for laboratories to perform QC Design, which allows them to adapt the QC procedures to fit the quality required by the test and the performance observed for the method. Then you only get an out-of-control result when there's a real problem, and you only recalibrate when there's a true need to recalibrate. [See an interesting note of caution about this practice]

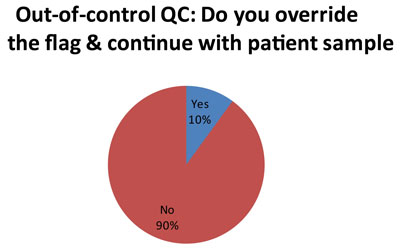

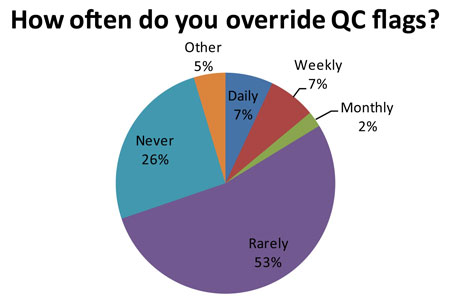

Here is perhaps the most troubling finding. A minority of laboratories (5 out of 50 responses), at a certain point, simply over-ride the QC flags and continue testing patient samples. Now we don't get much explanation in the audit as to how or why each laboratory makes this decision. But we can guess that laboratories using 2s control limits get tired of repeating results and simply decide to ignore certain flags. The audit did probe further about this practice, which has been termed "non-ideal QC."

Out of 84 responding laboratories, 14 of them (16.7%, or about 1 in 6 labs) regularly ignore their own QC. Now that number may be artificially high because of the false rejection rate caused by 2s limits. "Ignore" may also be too strong a word - the audit uses the term "over-ride." But essentially these laboratories have lost trust in their own QC system. There is a danger that the more these labs over-ride a QC flag, the more acceptable that practice becomes. Eventually, it may be acceptable to over-ride all QC flags, even when there is a real problem occurring.

Dr. Housley's commentary

My UK profession at present remains committed to trying to improve quality. This audit is designed to emphasise that what we believe in does not get implemented in practice. Auditing IQC practice within a lab is important: it tells us what is being done (and what isn't), and the audit process can serve as a supplementary tool to support traditional IQC techniques.

One of the problems with the QC audit standards as they stand is that we have not indicated strongly enough what we believe acceptable levels of practice are - in a quantifiable way that allows people to truly measure their performance against the standards.

I am of the opinion that most labs in theory are happy to quote that they have a rigorous IQC programme, but seem to be naive or unaware of the realities about how poor the programmes actually are. It is difficult to truly understand why something everyone apparently believes in is practiced so poorly. I hope the audit begins to shed some light on where the issues lie so that we can address them.

For me the major observations from the audit are:

- Some of the free text responses [not shown in this article to preserve confidentiality] in the audit suggest poor basic knowledge. I am not convinced that everyone who said they use single 2SD rules is actually doing so; they just do not understand what they do.

- Combinations of poor planning, poor knowledge and variable confidence in methodology mean there is very wide variation in practice, but it is worrying that people feel that increased frequency of IQC is the answer rather than looking at what they are doing. This has significant global cost implications.

- In the UK we still tend at present to run on-call services, ie, only acute emergency work is run at night and weekends. For some reason we allow ourselves to practice different IQC when we have an acutely ill emergency patient at night, compared to a well person health check done during the day.

- The mentality when IQC fails is still that it must be the IQC that is wrong and not the method, ie, we are convinced that all IQC rejections are false. This perpetuates the practice of repeating ad infinitum until you get a value that is in range.

- It is worrying that labs are allowing our most junior and least experienced members of staff to make fundamental decisions about acceptance or rejection, and most depressingly about whether we should continue to process patient samples. I am not aware of previous mention in the literature about IQC seeming to sit with junior staff on a day to day basis, and senior staff having a more managerial rather than operational input into IQC.

- Many labs globally are increasingly working as part of networks or more formal multi-site services. There seems to be a lack of clarity on how performance between sites should be monitored, if at all.